Cloud containers have seen a surge in interest in recent years, with leading users such as Google, Spotify, Uber and eBay raising their profile and new open-source technologies, such as Docker, driving their adoption.

They’ve been a shot in the arm for developers and operators because of their portability and ease of deployment. But they can also optimize your workload density, providing a more efficient way to utilize your cloud resources than standard virtual machines (VMs).

In this post, we run through some of the key concepts and features of the technology, explore its cost-saving potential and examine some of the challenges that still exist in cost and performance optimization.

What Are Containers?

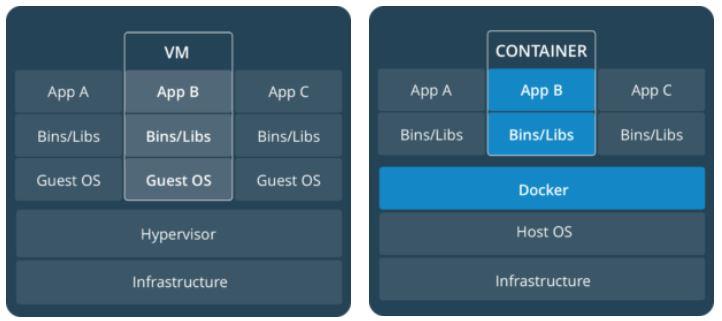

Containers are an application delivery technology that offers a lightweight alternative to VMs for smaller or distributed workloads. Just like VMs, they provide isolated environments for your applications. But, unlike VMs, they reside at the host OS level rather than the guest OS level.

This means they’re able to do away with the hypervisor and instead make use of the resource isolation features of the OS kernel. As a result, without the additional layer of abstraction, they’re able to offer better performance than traditional VM deployments.

Moreover, they take a more efficient approach to resource allocation by sharing the kernel of the host OS with other containers. So, unlike VMs, they don’t need their own dedicated OS, thereby reducing their resource footprint.

Containers and VMs are based on different levels of abstraction.

Image source: Docker

This means that, whereas a VM-based instance hosts a single VM, you’re often able to deploy a whole series of containers to a container-based instance of the same size. Therefore, provided you use them correctly, containers can shrink your cloud infrastructure requirements and significantly reduce your IT costs.

Container Portability

Because containers are lightweight and also carry their application dependencies with them, they’re easily transportable between different clouds. This gives you scope to use them as part of a hybrid cloud strategy. Alternatively, you can move them between different cloud providers, helping you to take advantage of the best cloud pricing and avoid vendor lock-in.

What’s more, they’re designed for stateless workloads. So you can mix and match them across different operating systems in a distributed arrangement with other microservices, with no service relying on another’s dependencies.

Nevertheless, as containers rely on the host OS, you can experience compatibility issues when moving them between heterogeneous environments, such as different distributions and versions of Linux.

Leading container platform Docker initially evolved as a Linux technology. But, following the launch of Windows Server 2016, Windows now provides native support for Docker. This means it’s now possible to connect a fleet of distributed Linux and Windows containers on the same Docker network, providing huge integration potential.

In addition to standard Windows containers, Windows Server 2016 also introduced Hyper-V containers, which carry their own copy of the OS kernel. This new technology could ultimately provide the basis for container portability between Linux and Windows systems, which could provide even more cost and performance optimization opportunities in the future.

Container Security

Containers provide segregated environments for your microservices and should offer sufficient isolation to meet most security and compliance requirements. Nevertheless, your containerized processes still share the core components of the host OS, which could present challenges to the security of highly sensitive workloads.

To enhance security, you could adopt an alternative deployment method, where your containers share the guest OS of your VM rather than the host OS, as shown in the following illustration:

Two alternative container deployment methods.

Image source: Docker

This would increase the isolation level of your applications. But, owing to the additional virtualization layer, it would lower application performance at the same time.

Application Development

Containers are perfectly adapted to the modern cloud approach to application architecture based on distributed designs of loosely coupled microservices.

It’s easier to build in fault tolerance by replicating microservices across a cluster of container instances. It’s also easier to independently patch or update container-based code and system environments without affecting other services in your container network.

And because they’re also fully self-contained environments, they provide everything you need to run an application. This makes life easier for developers, who only need to worry about their code.

Container Cluster Management

Efficient scaling is another key advantage of containers over standard VM deployments, as you can fine-tune your infrastructure capacity requirements by independently replicating containerized tasks based on individual resource demand.
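As a rough illustration of replicating tasks by individual demand, the sketch below sizes each service on its own. The service names, request rates and per-task capacities are made-up figures, and `replicas_needed` is a hypothetical helper rather than part of any orchestration engine.

```python
import math


def replicas_needed(demand_rps: float, capacity_rps_per_task: float,
                    min_replicas: int = 1) -> int:
    """Return how many copies of a task are needed to serve the demand."""
    if capacity_rps_per_task <= 0:
        raise ValueError("capacity_rps_per_task must be positive")
    return max(min_replicas, math.ceil(demand_rps / capacity_rps_per_task))


# Hypothetical figures: (current demand, capacity of one task), in requests/s.
services = {
    "checkout": (450.0, 100.0),
    "search": (1200.0, 250.0),
    "email": (5.0, 50.0),
}

# Each service is scaled independently of the others.
plan = {name: replicas_needed(demand, capacity)
        for name, (demand, capacity) in services.items()}
print(plan)
```

In this sketch the lightly loaded `email` service keeps a single copy while the two busier services each get more, so capacity is added only where the demand is.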

Scaling is usually managed by container orchestration systems, which deploy your tasks to clusters of container-based instances.

These tools play an instrumental role in ensuring application performance and getting the most out of your container clusters. They’re able to perform a variety of different functions, including:

- Provisioning your container environment

- Managing container images

- Adding or removing containers from your cluster

- Monitoring utilization and restarting unhealthy containers

- Controlling distribution of services across your cluster

The most widely used open-source container orchestration engines include Kubernetes, Mesos and Docker Swarm. AWS also offers a proprietary container management service, Amazon ECS, while Microsoft’s Azure Container Service (ACS) provides a modular solution for running the container orchestration engine of your choice.

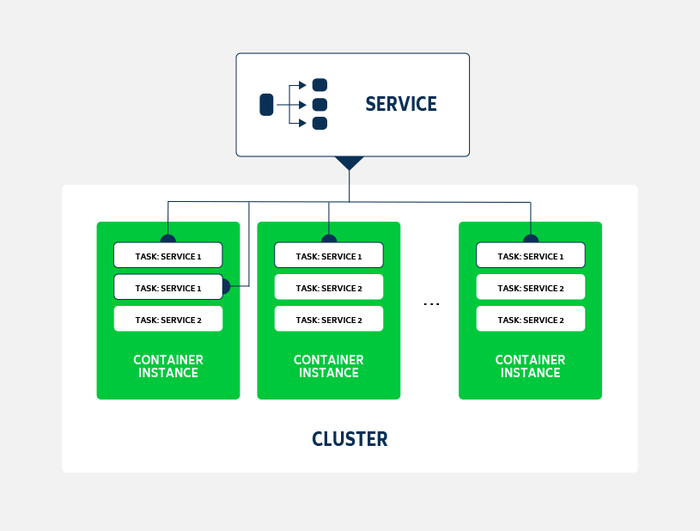

Container orchestration engines each use their own unique service concepts and cluster architecture. However, the following diagram, based loosely on the concepts used by Amazon ECS, illustrates their key components and fundamental design:

Topological representation of a container cluster

A container instance is a fixed allocation of cloud resources, just like a VM-based instance. However, it is dedicated to a group of containers rather than a VM.

Each container runs a task. This could be an in-house application component that performs a specific function or a third-party installation, such as a SQL server or Elasticsearch. A service is a group of one or more tasks performing the same function, while a cluster is simply a managed group of container instances.
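To make the relationships between these terms concrete, here is a minimal sketch of them as Python data classes. The class and field names are our own illustration of the concepts described above, not the data model of Amazon ECS or any other engine.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    """One containerized unit of work, such as an app component or a database."""
    name: str


@dataclass
class Service:
    """A group of one or more tasks performing the same function."""
    name: str
    tasks: List[Task] = field(default_factory=list)


@dataclass
class ContainerInstance:
    """A fixed allocation of cloud resources hosting a group of containers."""
    instance_id: str
    tasks: List[Task] = field(default_factory=list)


@dataclass
class Cluster:
    """A managed group of container instances."""
    name: str
    instances: List[ContainerInstance] = field(default_factory=list)

    def task_count(self) -> int:
        """Total number of tasks running across all instances."""
        return sum(len(instance.tasks) for instance in self.instances)


# Hypothetical layout: a "search" service replicated across two instances.
search = Service("search", [Task("search-1"), Task("search-2")])
database = Service("database", [Task("db-1")])

cluster = Cluster("demo", [
    ContainerInstance("instance-a", [search.tasks[0], database.tasks[0]]),
    ContainerInstance("instance-b", [search.tasks[1]]),
])
print(cluster.task_count())
```

Note that a service cuts across instances: the two `search` tasks belong to one service but run on different container instances.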

Lifecycle Management

Container platforms, such as Docker, use a layered file system design, which works along similar lines to the version control system Git. Layering lends itself to efficient container lifecycle maintenance. This is because, each time you roll out a new feature, patch or bug fix, you only ship the changes to your application rather than the entire codebase. This saves storage space and network bandwidth, speeds up software updates, and makes it easier to roll back changes.
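The idea of shipping only what has changed can be sketched with a toy model in which an image is an ordered list of content-addressed layers. This is a simplification for illustration only: the layer contents and the `layers_to_ship` helper are hypothetical, and real container platforms store and identify layers in more elaborate ways.

```python
import hashlib
from typing import List, Set


def layer_digest(content: bytes) -> str:
    """Identify a layer by the SHA-256 hash of its content."""
    return hashlib.sha256(content).hexdigest()


def layers_to_ship(new_image: List[str], already_present: Set[str]) -> List[str]:
    """Return only the layers the target host does not already hold."""
    return [digest for digest in new_image if digest not in already_present]


# Hypothetical layers, from the bottom of the stack to the top.
base = layer_digest(b"base OS files")
deps = layer_digest(b"application dependencies")
app_v1 = layer_digest(b"application code v1")
app_v2 = layer_digest(b"application code v2 with a bug fix")

image_v1 = [base, deps, app_v1]
image_v2 = [base, deps, app_v2]

# The host already runs v1, so it holds all three of v1's layers.
host_cache = set(image_v1)
to_ship = layers_to_ship(image_v2, host_cache)
print(f"{len(to_ship)} of {len(image_v2)} layers need to be transferred")
```

Rolling back works the same way in this model: the host still holds every layer of `image_v1`, so nothing needs to be transferred to return to it.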

Not only that but, because you can update one container without affecting others, your lifecycle management processes will be more reliable and run more smoothly. This will become all the more important as you reduce manual control in favor of continuous integration (CI) and continuous delivery (CD) tools, such as Jenkins and CodeFresh.

Finally, infrastructure management software, such as Chef and Puppet, can also play a useful role, giving you a way to manage containers alongside traditional cloud infrastructure using the same tooling.

Performance and Cost Optimization

In addition to a reduced level of abstraction and efficient scaling, containers deliver other performance benefits.

They require little initialization compared with the process of booting up a full-blown OS on a standard VM. As a result, they can start up incredibly quickly, making your applications far more responsive to fluctuating demand.

What’s more, containers break down a single instance into multiple execution environments, providing more distributed processing capability suited to certain types of workloads.

Nevertheless, despite their clear advantages, containers present their own unique challenges to cost and performance optimization.

Balanced Cluster Configurations

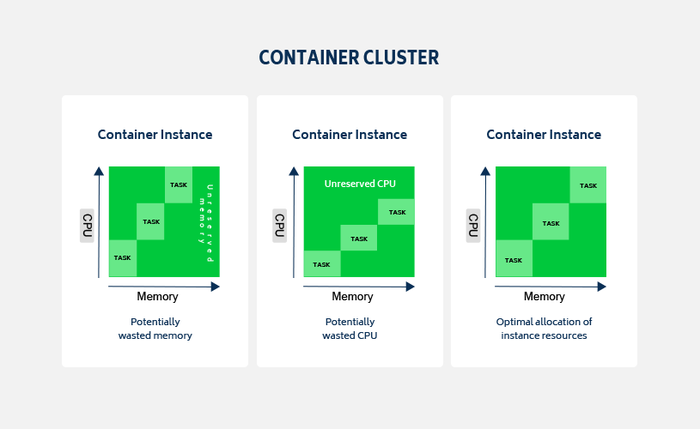

As with a VM-based instance, each container instance delivers a fixed provision of CPU and memory. At the same time, each containerized task will consume a proportion of your container instance resources. To achieve optimum workload density, your containers should make good use of all the resources available across your instance cluster.

To understand this in more detail, let’s take the following simplified example, which shows nine tasks deployed across a cluster of three container instances:

An example container cluster showing two potentially underutilized instances

The optimum resource allocation for each task is represented by its own block of CPU and memory. On the first node of the cluster, the aggregated memory required by the three tasks allocated to the instance is less than the total memory available. The containers on that node can only consume this unreserved memory when they utilize more memory than their own provisioned allocation. If the memory allocated to each task is already sufficient then this unreserved capacity will go largely unutilized.

By contrast, on the second node of the cluster, the aggregated CPU required by the three tasks that are allocated to the instance is less than the total processing power available, leaving potentially wasted CPU.

On the third node, the combined CPU and memory reservation of the three tasks exploits the full resources of the container instance they’re allocated to. This represents the ideal allocation of instance resources, on the assumption that the CPU and memory reservations for each container are also optimally utilized.

In order to optimize utilization as a whole, this should be your objective for all instances across your cluster.
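The arithmetic behind the three nodes can be sketched as follows. The capacity and reservation figures are invented purely to reproduce the pattern described above (spare memory on the first node, spare CPU on the second, nothing spare on the third), and `unreserved` is a hypothetical helper.

```python
# Hypothetical capacity of every container instance: (CPU units, memory in MiB).
INSTANCE_CAPACITY = (2048, 4096)

# Hypothetical (CPU units, memory MiB) reservations for the three tasks per node.
cluster = {
    "node-1": [(1024, 1024), (512, 1024), (512, 1024)],  # memory left over
    "node-2": [(512, 2048), (512, 1024), (512, 1024)],   # CPU left over
    "node-3": [(1024, 2048), (512, 1024), (512, 1024)],  # fully reserved
}


def unreserved(tasks, capacity=INSTANCE_CAPACITY):
    """Return the (CPU, memory) on an instance that no task has reserved."""
    free_cpu = capacity[0] - sum(cpu for cpu, _ in tasks)
    free_mem = capacity[1] - sum(mem for _, mem in tasks)
    if free_cpu < 0 or free_mem < 0:
        raise ValueError("tasks reserve more than the instance provides")
    return free_cpu, free_mem


report = {node: unreserved(tasks) for node, tasks in cluster.items()}
for node, (free_cpu, free_mem) in report.items():
    print(f"{node}: {free_cpu} CPU units and {free_mem} MiB unreserved")
```

With these figures, a quarter of the memory on the first node and a quarter of the CPU on the second are paid for but not reserved by any task.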

The three-node example above demonstrates the fine-grained level of control that’s possible by using containers instead of standard VMs. In reality, your container configuration will be somewhat different. But the bottom line is that, through a good balance of container sizing, cluster size, container replication and choices of container instance, you can minimize your resource requirements and reduce your monthly cloud costs.
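One way to explore that balance is to estimate how many instances a given set of task reservations needs. The sketch below uses first-fit decreasing, a common bin-packing heuristic. It is our own illustration rather than the placement strategy of any particular orchestration engine, and the capacity and reservation figures are made up.

```python
from typing import List, Tuple

Reservation = Tuple[int, int]  # (CPU units, memory in MiB)


def first_fit_decreasing(tasks: List[Reservation],
                         capacity: Reservation) -> List[dict]:
    """Place tasks on instances, largest first, opening new ones as needed."""
    def dominant_share(task: Reservation) -> float:
        # Size a task by whichever resource it uses the larger share of.
        return max(task[0] / capacity[0], task[1] / capacity[1])

    instances: List[dict] = []
    for cpu, mem in sorted(tasks, key=dominant_share, reverse=True):
        if cpu > capacity[0] or mem > capacity[1]:
            raise ValueError("task is larger than a whole instance")
        for inst in instances:
            if inst["free_cpu"] >= cpu and inst["free_mem"] >= mem:
                break  # first instance with enough room for both resources
        else:
            inst = {"free_cpu": capacity[0], "free_mem": capacity[1], "tasks": []}
            instances.append(inst)
        inst["free_cpu"] -= cpu
        inst["free_mem"] -= mem
        inst["tasks"].append((cpu, mem))
    return instances


# Hypothetical workload: nine task reservations and one instance size.
tasks = [(1024, 1024), (512, 2048), (1024, 2048)] + [(512, 1024)] * 6
placement = first_fit_decreasing(tasks, capacity=(2048, 4096))
print(f"{len(placement)} instances needed")
for inst in placement:
    print(inst["tasks"], "free:", inst["free_cpu"], inst["free_mem"])
```

Rerunning the sketch with a different `capacity` or different task sizes gives a quick feel for how container sizing and instance choice affect the number of instances you pay for. A heuristic like this does not guarantee the minimum possible count.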

A New Level of Optimization

Cloud containers promise higher workload densities and lower costs than traditional VM approaches. But, just like VMs, they’re vulnerable to underutilization and uncontrolled proliferation of container instances.

This calls for clear visibility into your cloud environments, using cost and performance monitoring tools that help prevent container sprawl and spiraling monthly cloud costs. In addition, the more sophisticated tools can recommend container instance types and sizes that better align with your application needs.

Container optimization is a relatively new concept that takes cloud cost management to a whole new level of complexity, and these are still early days for this rapidly evolving technology. However, with the right configuration, the potential cost savings are substantial.

