Optimizing cloud costs is as important as optimizing performance. But is it possible to do both?

Enterprise IT infrastructure has become a dynamic and complex environment, where users can spin up new cloud resources in a matter of clicks. What’s more, you can choose from a wide range of instances, storage types, and payment mechanisms, each designed to suit different use cases and levels of resource consumption.

But the ease of spinning up resources in Amazon Web Services (AWS) and Microsoft Azure comes with a warning. Too often, simple human error can lead to costly mistakes if you’re not closely monitoring your cloud spend and utilization.

Cloud cost optimization tools within cloud management platforms can provide visibility into your cloud costs and utilization. The right toolset can help you keep your monthly cloud bills in check and reduce your management overhead. By deploying the right cost optimization solutions, you can identify inefficiencies in your cloud environment and save money on your next cloud bill.

Here are 10 common user errors that cloud cost optimization tools can help you fix:

1. Unused Instances

Quick and easy resource provisioning is one of the key advantages of on-demand pay-as-you-go cloud computing. You can order new infrastructure at the click of a button, slashing weeks or months off the time it takes to get new IT projects off the ground.

In the same way the cloud makes it easy to spin up virtual machines, it’s just as easy to forget about them. However, you pay for your cloud infrastructure whether you use it or not.

Cost optimization tools will help you address the issue of cloud sprawl. This is where an organization runs up massive bills through uncontrolled ordering of instances and other on-demand IT services — often as a result of development testing and shadow IT projects.

By keeping track of all instances in your billing accounts, cloud cost optimization tools can identify those that are inactive or unhealthy. They can then flag these instances for manual or automatic removal.
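As a rough illustration of how a tool might flag inactive instances, the sketch below filters usage records by CPU and network activity. The `InstanceUsage` record, the instance IDs, and both thresholds are assumptions made for this example, not values taken from any particular product.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    instance_id: str
    avg_cpu_percent: float  # average CPU over the lookback window
    network_bytes: int      # total network traffic over the same window


def find_idle_instances(usages, cpu_threshold=2.0, network_threshold=5_000_000):
    """Return IDs of instances whose CPU and network activity are both below the thresholds."""
    return [
        u.instance_id
        for u in usages
        if u.avg_cpu_percent < cpu_threshold and u.network_bytes < network_threshold
    ]


# Hypothetical usage data for three instances.
usages = [
    InstanceUsage("i-web-01", 37.5, 9_200_000_000),
    InstanceUsage("i-test-07", 0.4, 120_000),
    InstanceUsage("i-batch-02", 1.1, 48_000_000),
]
print(find_idle_instances(usages))
```

Requiring both signals to be low avoids flagging an instance that uses little CPU but still serves traffic.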

2. Unhealthy Instances

Unhealthy instances that go unnoticed don’t just waste money, but also undermine the performance of your applications. Make sure to put measures in place to identify unresponsive instances and replace them with healthy ones.

One of the most common ways to deal with unhealthy instances is to use auto scaling — a horizontal scaling feature supported by both AWS and Azure.

With auto scaling, you configure your resources into a cluster of instances dedicated to your application. Auto scaling then automatically adjusts the number of running machines in your cluster based on the conditions you define, such as whenever CPU utilization goes above or below a certain threshold.

This means you can fine-tune your cloud resources to demand. But the added benefit is that many auto-scaling services are also able to identify and replace unhealthy instances, helping you to keep wasted infrastructure to a minimum.
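The threshold logic described above can be sketched in a few lines. This is a simplified model rather than the behavior of any specific auto scaling service: the function names, the 70% and 30% thresholds, and the cluster size limits are all assumptions chosen for illustration.

```python
def desired_capacity(current, avg_cpu, scale_out_above=70.0, scale_in_below=30.0,
                     min_size=2, max_size=10):
    """Add one instance above the upper threshold, remove one below the lower threshold."""
    if avg_cpu > scale_out_above:
        target = current + 1
    elif avg_cpu < scale_in_below:
        target = current - 1
    else:
        target = current
    # Never leave the configured cluster size limits.
    return max(min_size, min(max_size, target))


def split_by_health(instances):
    """Split a mapping of instance ID -> healthy flag into (keep, replace) lists."""
    keep = [name for name, healthy in instances.items() if healthy]
    replace = [name for name, healthy in instances.items() if not healthy]
    return keep, replace


print(desired_capacity(current=4, avg_cpu=85.0))
print(split_by_health({"node-a": True, "node-b": False}))
```

Keeping a gap between the two thresholds helps prevent the cluster from repeatedly scaling out and back in when utilization hovers around a single value.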

3. Incorrect Instance Sizing

Some enterprise cloud users still have an on-premises mindset, which causes them to provision far more capacity than they actually need.

To avoid unnecessary waste, you’ll need to analyze the resource consumption of your virtual machines to determine the correct instance sizing for the optimum balance of cost and performance. This process of right sizing means moving to larger sizes, smaller sizes, or even different types of instances based on your usage to better suit your computing needs.

This is no easy challenge. A large-scale enterprise cloud environment has potentially hundreds or thousands of instances running at any one time.

Cloud cost optimization tools automatically provide instance sizing recommendations, helping you to avoid hours of manual calculations. They can also help you match your workloads to more suitable alternatives in other instance families. This could mean switching from general purpose instances to those designed for specific use cases. To save time and effort, look for solutions that can automate the right sizing process with one click.
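To make the idea of a sizing recommendation concrete, here is a minimal sketch based on peak CPU utilization alone. The size ladder, the assumption that each step doubles capacity, and the 40% and 80% thresholds are hypothetical. Real tools typically weigh memory, disk, and network as well.

```python
# Hypothetical size ladder; each step up is assumed to double capacity.
SIZES = ["small", "medium", "large", "xlarge"]


def recommend_size(current, peak_cpu_percent, low=40.0, high=80.0):
    """Suggest one size down when peak CPU is low, one size up when it is high."""
    idx = SIZES.index(current)
    if peak_cpu_percent < low and idx > 0:
        # Halving capacity roughly doubles utilization, which still stays below `high`.
        return SIZES[idx - 1]
    if peak_cpu_percent > high and idx < len(SIZES) - 1:
        return SIZES[idx + 1]
    return current


print(recommend_size("large", 22.0))
print(recommend_size("medium", 91.0))
```

Using peak rather than average utilization is a deliberately cautious choice here, so a downsized instance can still absorb its busiest period.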

4. Unattached Persistent Volumes

This is a particularly important housekeeping task for AWS and Microsoft Azure users. Attached volumes aren’t automatically deleted when you terminate the associated instance — unless you change your default termination policy.

Specifically, when you terminate an Amazon EC2 instance, by default, your root volume is also deleted, but any additional attached Amazon Elastic Block Store (EBS) volumes are preserved. Unless you set a policy where all attached EBS volumes are automatically deleted as part of the termination process, you could end up racking up charges on unattached volumes that serve no useful purpose.

This is even more expensive if you leave SSD-backed volumes sitting around, which cost more than double their HDD counterparts. What’s more, in the case of Provisioned IOPS SSD volumes, you continue to pay for provisioned IOPS, which is charged separately from storage.

Just as with AWS, your attached storage in Microsoft Azure isn’t automatically deleted when you terminate your virtual machine. You can identify and remove unattached volumes using the command line interfaces (CLIs) provided by both AWS and Azure. However, this can be a laborious undertaking if you have a large number of deployments across a multi-cloud environment.

You only pay for what you’re using in the cloud. But without clearing out these unattached resources, the cloud provider still considers the volumes active. Cloud inventory monitoring systems can identify and delete unattached persistent storage disks, such as Amazon EBS volumes. This ensures that you save money on your cloud bill.
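The sketch below shows one way an inventory check for unattached volumes could work. It assumes you have already exported your volume list into simple records. The field names and per-GB prices are hypothetical. The state value `available` mirrors how EC2 reports an EBS volume that is not attached to any instance.

```python
# Hypothetical per-GB monthly prices, for illustration only; check your provider's price list.
PRICE_PER_GB_MONTH = {"ssd": 0.10, "hdd": 0.045}


def unattached_volumes(volumes):
    """Return the volumes that are not attached to any instance."""
    return [v for v in volumes if v["state"] == "available"]


def monthly_waste(volumes):
    """Estimate the monthly storage cost of all unattached volumes."""
    return sum(
        v["size_gb"] * PRICE_PER_GB_MONTH[v["type"]]
        for v in unattached_volumes(volumes)
    )


# Hypothetical inventory export.
volumes = [
    {"volume_id": "vol-001", "state": "in-use", "size_gb": 200, "type": "ssd"},
    {"volume_id": "vol-002", "state": "available", "size_gb": 500, "type": "ssd"},
    {"volume_id": "vol-003", "state": "available", "size_gb": 1000, "type": "hdd"},
]
print([v["volume_id"] for v in unattached_volumes(volumes)])
print(round(monthly_waste(volumes), 2))
```

This estimate covers storage only. Provisioned IOPS charges, where they apply, would need to be added separately.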

5. Orphan Snapshots

Just as attached storage volumes remain after you terminate an instance, so do your volume snapshots.

Point-in-time backups on both AWS and Google Cloud Platform are compressed and incremental, which reduces your storage footprint. AWS, for example, backs up EBS volume snapshots to S3. Nevertheless, your initial snapshot is of the entire volume. If you take regular subsequent snapshots then, depending on your retention period and how frequently you back up, you could need as much capacity again as the initial snapshot.

Meanwhile, until April 2020, Microsoft Azure only supported full point-in-time snapshots, which meant the storage cost of maintaining backups on Azure could be even higher. Make sure you are using incremental snapshots and pruning old versions as needed.

Cloud cost management platforms can help you keep costs down by alerting you to orphan snapshots and providing a quick and easy way to remove them.
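One possible definition of an orphan snapshot is a snapshot whose source volume no longer exists and which is older than your retention period. The sketch below applies that rule to exported records. The field names, IDs, dates, and the 30-day retention default are assumptions for illustration.

```python
from datetime import datetime, timedelta


def orphan_snapshots(snapshots, existing_volume_ids, now, retention_days=30):
    """Return IDs of snapshots whose source volume is gone and that are past retention."""
    cutoff = now - timedelta(days=retention_days)
    return [
        s["snapshot_id"]
        for s in snapshots
        if s["volume_id"] not in existing_volume_ids and s["created"] < cutoff
    ]


# Hypothetical snapshot inventory.
snapshots = [
    {"snapshot_id": "snap-a", "volume_id": "vol-1", "created": datetime(2024, 1, 10)},
    {"snapshot_id": "snap-b", "volume_id": "vol-9", "created": datetime(2024, 2, 1)},
    {"snapshot_id": "snap-c", "volume_id": "vol-9", "created": datetime(2024, 5, 25)},
]
print(orphan_snapshots(snapshots, existing_volume_ids={"vol-1"}, now=datetime(2024, 6, 1)))
```

Recent snapshots of deleted volumes are kept on purpose, since they may be the only remaining copy of data someone still needs.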

6. Unused Static IP Addresses

Static IP addresses are a limited resource. So public cloud vendors levy a charge on unused static addresses to encourage customers to use them efficiently.

If you’re an AWS user, you get one free Elastic IP (EIP) address with each running EC2 instance. But any additional addresses come at a small hourly cost. You’re also charged for any address that’s not associated with a running instance.

On Microsoft Azure, if you use the Azure Resource Manager (ARM) deployment model then you get your first five static public IP addresses in each region for free. Likewise, if you deploy your workloads using Microsoft’s Classic (ASM) model then each of your first five reserved IP addresses is free — provided it’s associated with a running virtual machine. Otherwise it incurs an hourly charge.

However, in both deployment models, beyond your first five free addresses, each additional static or reserved IP address is also charged at a small hourly rate. Cloud cost optimization tools can help you set service limits so that you don’t go over this threshold in the first place. The bottom line is that, whatever cloud platform you use, if you no longer need your unused static IPs then you should release them.

7. Data Transfer Charges

Data transfer charges often come as a major shock to new cloud customers when they receive their first monthly bill. And keeping those costs down can present significant optimization challenges.

Transferring data into your public cloud infrastructure is generally free. But transferring it out is another matter. Outbound transfer charges vary from region to region. When choosing where to host your data, you’ll need to balance cost and proximity to your customers and users.

In addition, you should consider offloading the work of handling traffic to your vendor’s content delivery network, such as Amazon CloudFront or Azure Content Delivery Network. Not only can this potentially reduce overall data transfer costs, but it can also speed up delivery of your data.

You should also beware of charges for data transfer between regions and zones. This is particularly important when considering the cost impact of a fault-tolerant architecture that replicates data to different locations.

And finally, bandwidth charges may vary according to what services you use. Closely examine your vendor’s charging structure and build the cheapest data transfer routes into your application architecture.
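A back-of-the-envelope calculation can show how these charges add up. The sketch below uses a flat per-GB rate with a free allowance, which is a simplification, since real price lists are usually tiered. All rates and volumes shown are hypothetical.

```python
def transfer_cost(gb, rate_per_gb, free_gb=0.0):
    """Cost of moving `gb` gigabytes at a flat per-GB rate after a free allowance."""
    return max(0.0, gb - free_gb) * rate_per_gb


def monthly_transfer_bill(internet_gb, cross_region_gb, rates):
    """Break a month's transfer charges down by route."""
    return {
        "internet_egress": transfer_cost(internet_gb, rates["internet"], rates.get("free_gb", 0.0)),
        "cross_region": transfer_cost(cross_region_gb, rates["cross_region"]),
    }


# Hypothetical rates in dollars per GB; check your vendor's pricing for real values.
rates = {"internet": 0.09, "cross_region": 0.02, "free_gb": 100}
bill = monthly_transfer_bill(internet_gb=1100, cross_region_gb=500, rates=rates)
print({route: round(cost, 2) for route, cost in bill.items()})
```

Splitting the bill by route makes it easier to see whether replication between regions or delivery to end users is the larger cost driver.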

8. Underutilized Discount Capacity

Discounted alternatives to standard on-demand pricing, such as AWS and Azure Reserved Instances, can significantly reduce your overall cloud expenditure. These are pricing constructs rather than actual running instances. Therefore, they don’t necessarily require any direct operational management of your cloud infrastructure.

Reserved Instances can deliver savings in excess of 70%, where the exact discount depends on:

- The type of instance and payment terms you choose

- The flexibility you require, such as the ability to change the specification of the running instances applicable to your purchases

- How well you utilize your reservations, by ensuring you have matching instances consuming your Reserved Instance credits as much of the time as possible

AWS Reserved Instances can immediately reduce your bill if you choose to purchase them without any upfront payment. More advanced cost optimization tools provide support for discount mechanisms, such as Reserved Instances. For example, they can offer exchange suggestions for those Reserved Instances with built-in flexibility. This ensures reservations are better aligned to the resource consumption patterns of your applications.

Cloud cost optimization tools can also work the other way: by showing you where credits aren’t being used. They can then make recommendations on how to rebalance your workloads so they’re covered by your unallocated reservations. Furthermore, they can alert you to reservations that are about to expire, identify workloads that could benefit from a switch from on-demand to RI pricing, and make RI purchase recommendations.
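The link between utilization and savings can be worked through with simple arithmetic. A reservation costs the same whether or not it is used, so it only beats on-demand pricing when the share of reserved hours actually consumed exceeds the ratio of the reserved rate to the on-demand rate. The function names and hourly rates below are hypothetical.

```python
def reservation_utilization(reserved_hours, matched_hours):
    """Fraction of reserved hours that were consumed by matching instances."""
    if reserved_hours <= 0:
        raise ValueError("reserved_hours must be positive")
    return min(matched_hours, reserved_hours) / reserved_hours


def break_even_utilization(reserved_hourly_rate, on_demand_hourly_rate):
    """Utilization above which the reservation is cheaper than on-demand pricing."""
    return reserved_hourly_rate / on_demand_hourly_rate


def reservation_pays_off(reserved_hours, matched_hours, reserved_rate, on_demand_rate):
    """True when the reservation cost less than running the same hours on demand."""
    utilization = reservation_utilization(reserved_hours, matched_hours)
    return utilization > break_even_utilization(reserved_rate, on_demand_rate)


# Hypothetical month: 720 reserved hours, 540 of them matched by running instances.
print(reservation_utilization(720, 540))
print(reservation_pays_off(720, 540, reserved_rate=0.06, on_demand_rate=0.10))
```

In this example, a reservation priced at 60% of the on-demand rate needs more than 60% utilization to save money, which is why unallocated reservations are worth tracking.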

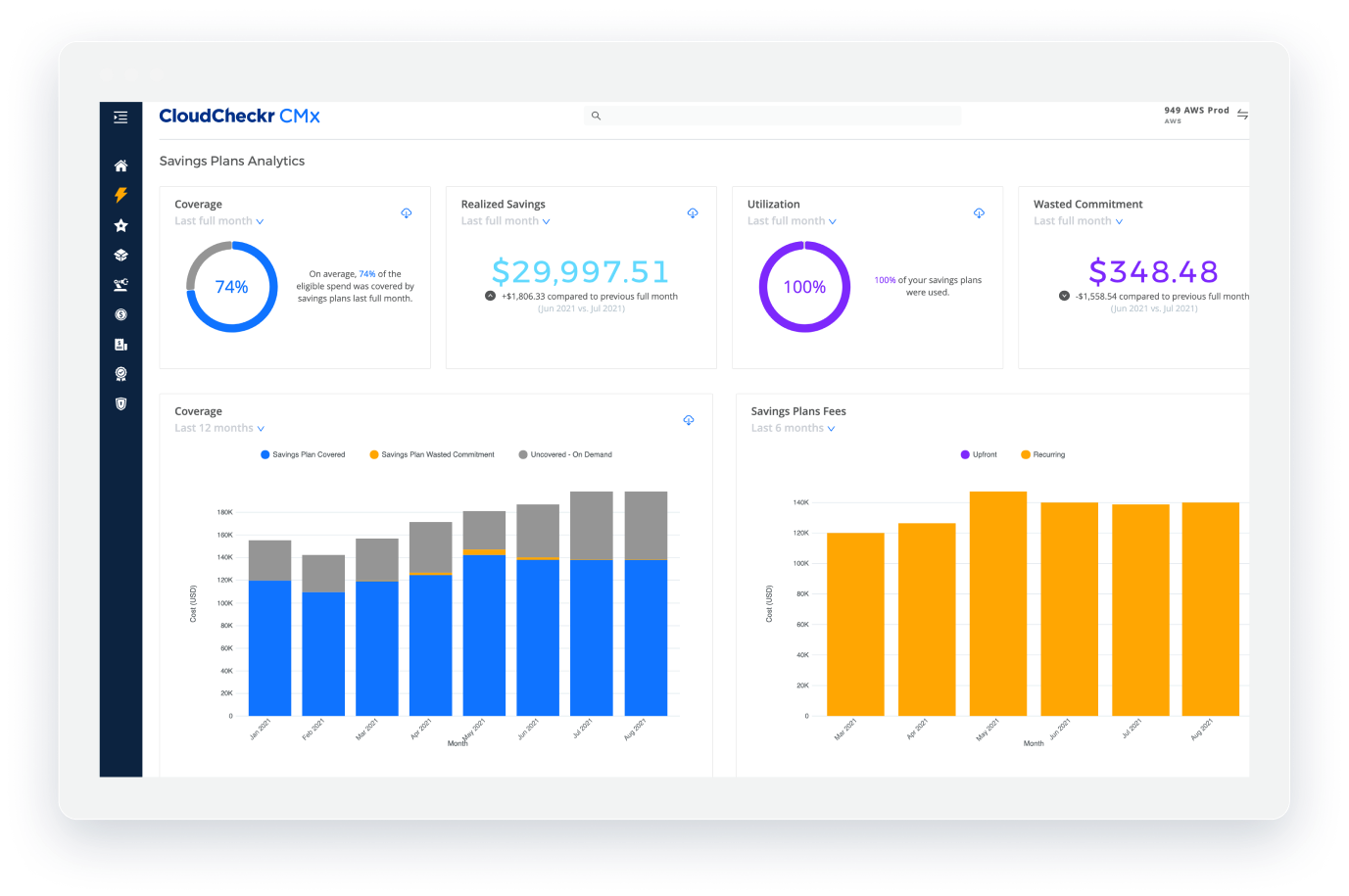

Taking advantage of other discounts, like AWS Savings Plans, can also help lower your monthly cloud bill. Similar to Reserved Instances, these require IT decision makers to plan their expenses either one or three years in advance before they sign the contract. However, you are committing to a dollar spend, hour-by-hour, as opposed to specific instance types. Regardless, to retain the pay-as-you-go benefits of the cloud, you’ll need cloud cost optimization tools that help you get the most from these long-term commitments.

9. Untagged or Inconsistently Tagged Resources

Labeling cloud resources using tagging can help you gain visibility into your cloud resources, better allocate costs, and manage cloud operations. However, when tags aren’t properly managed, it’s easy to lose sight of what’s going on in your cloud.

Following tagging best practices early and often is the best way to get a handle on your cloud costs. One of these practices is remembering case sensitivity. Most platforms won’t alert you if you create two tags in different cases (e.g., “Production” and “production”), nor will they alert you to duplicates or similar tags used for the same purposes.

For example, you might be using several tags with keys like Environment, Env, env, and environment with respective values of Production, Prod, prod, and production. By using tag mapping, a feature found in some cloud cost management platforms, you can map all of these duplicates to a consistent tag, eliminating confusion and potential cost inefficiencies. Similarly, a cloud management platform can also help you spot untagged resources and map them to the correct key/value.

The more accurate your tag mapping, the more granular and accurate your data. This, in turn, brings you more visibility into your cloud costs and resource utilization.
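A minimal sketch of tag mapping, using the example keys and values above, might look like the following. The alias tables, the function names, and the choice of `Environment` and `Production` as the canonical forms are assumptions for illustration, not how any specific platform implements the feature.

```python
# Lowercased aliases mapped to one canonical spelling (assumed for this example).
KEY_ALIASES = {"environment": "Environment", "env": "Environment"}
VALUE_ALIASES = {"production": "Production", "prod": "Production"}


def normalize_tags(tags):
    """Map known key and value variants to their canonical form; leave others untouched."""
    normalized = {}
    for key, value in tags.items():
        canonical_key = KEY_ALIASES.get(key.strip().lower(), key)
        canonical_value = VALUE_ALIASES.get(value.strip().lower(), value)
        normalized[canonical_key] = canonical_value
    return normalized


def missing_required_tags(tags, required=("Environment",)):
    """Return the required keys that are absent after normalization."""
    normalized = normalize_tags(tags)
    return [key for key in required if key not in normalized]


print(normalize_tags({"env": "prod", "Owner": "data-team"}))
print(missing_required_tags({"Owner": "data-team"}))
```

Matching on lowercased, trimmed text handles the case-sensitivity problem, while the second function gives a simple way to spot resources that lack a required tag.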

10. No Checklists in Place

Cost optimization works best when you know what you’re looking for. After all, if you don’t know the problem exists, how can you fix it?

While native tooling from cloud providers offers some foundational best practice checks, more sophisticated cost optimization solutions can help by performing deeper best practice checks. These checks point to potential resource waste in your cloud environment. For example, they can highlight redundant static IP addresses, unused provisioned storage capacity, and idle load balancers. When added together, these can substantially increase the number of unnecessary charges in your monthly cloud bill.

In addition to your core cost management responsibilities, you can implement other measures to streamline cloud infrastructure costs. Rather than searching for these issues manually, a cloud management platform can offer suggestions for savings all in one place.

The longer you leave cloud inefficiencies unattended, the more quickly your costs mount up. By leveraging the automation features of cost optimization tools, you can set instant responses to misconfigurations, nipping them in the bud as soon as they arise. Automating your cost optimization best practice checks helps you keep costs in constant check 24/7. Automation can reduce or eliminate manual procedures. This, in turn, frees up your operations teams to focus on other aspects of running your cloud.

Measuring Success with Cloud Cost Optimization Tools

Success in the cloud relies on continuous optimization decisions. And the longer you put them off, the more your costs will continue to pile up. So start taking action straight away.

Tag your resources so you can keep track of them. Monitor your deployments. Get a deeper understanding of how your public cloud platform works. And use cloud cost optimization tools that give you visibility and a rationalized view into your complex cloud environment.

To see real success with cloud cost optimization, look beyond your cloud vendor’s native monitoring tools. Third-party cost tracking services developed with enterprise needs in mind offer additional functionality, such as spending forecasts, cost allocation, and multi-cloud capabilities.

Above all, cloud management offers a deeper level of insight that large-scale organizations need to manage their complex cloud environments — and a level of control that any cost-aware enterprise cannot afford to do without.
